Powered by the leading LLM providers

Genum Infrastructure Suite

A structured, vendor-independent framework for prompt validation.

Built for reliability, scalability, and full control over AI-driven automation.

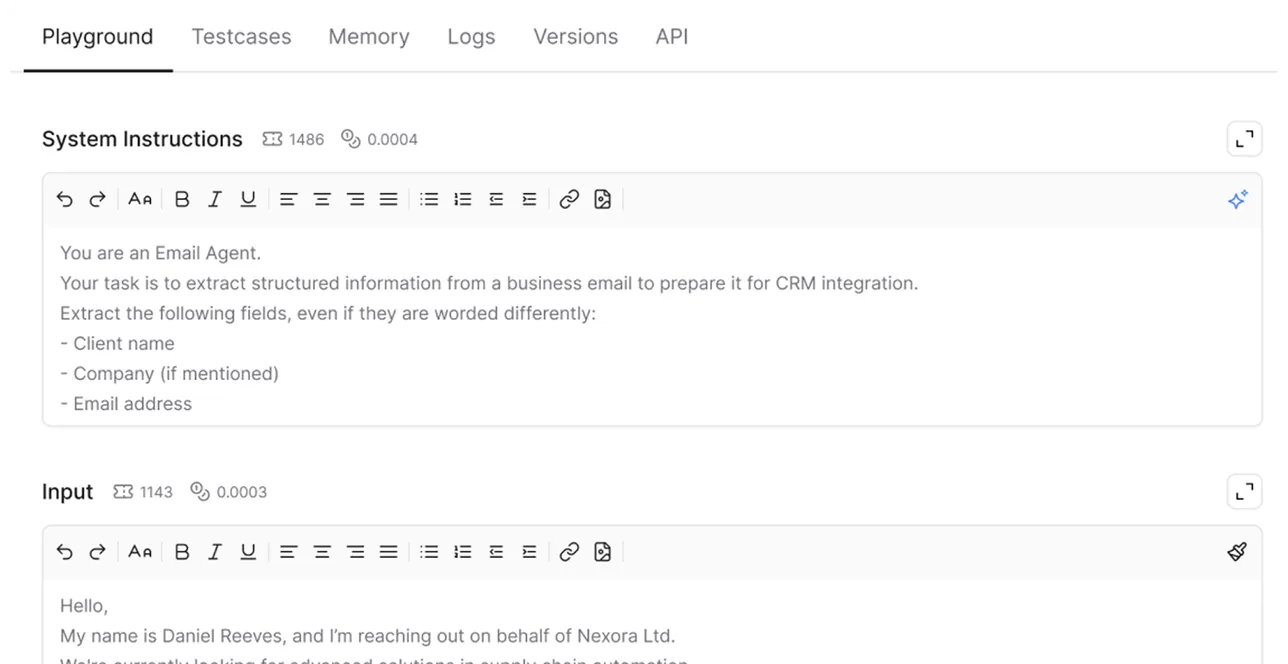

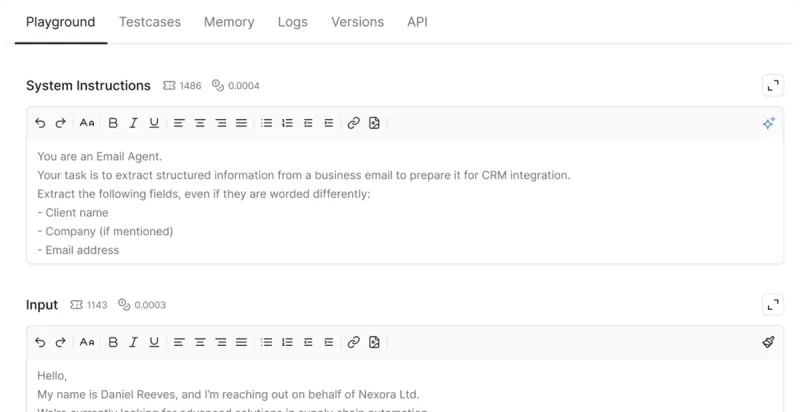

Prompt Development

Build structured, reusable prompts with version control and modular templates for scale and collaboration.

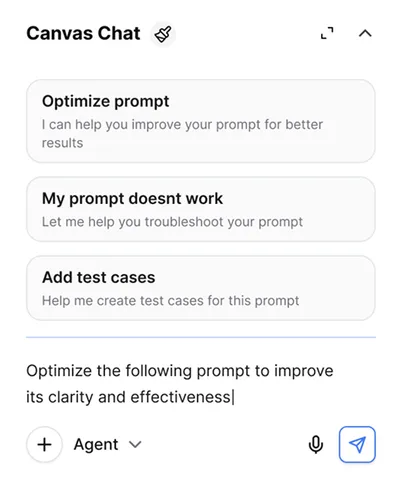

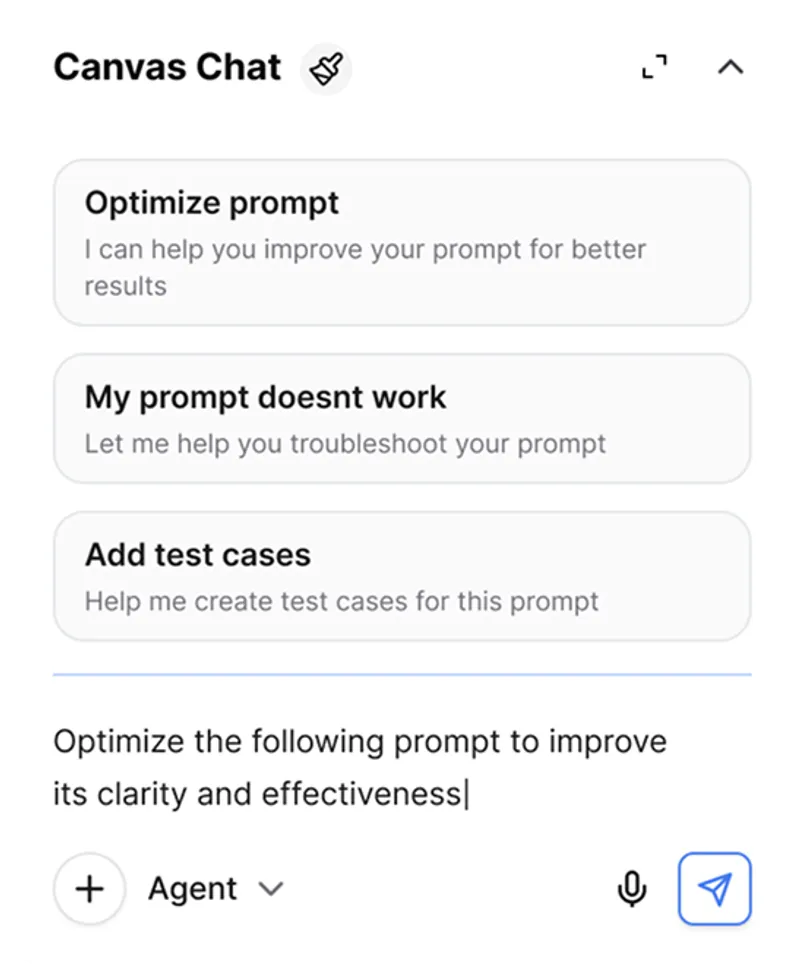

Canvas Chat

Interactively optimize prompts, debug issues, and generate test cases directly in Canvas.

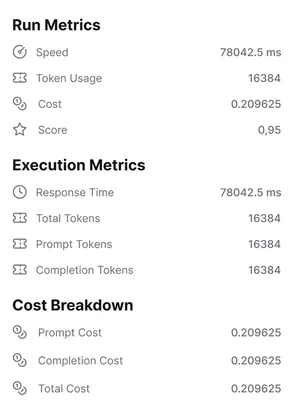

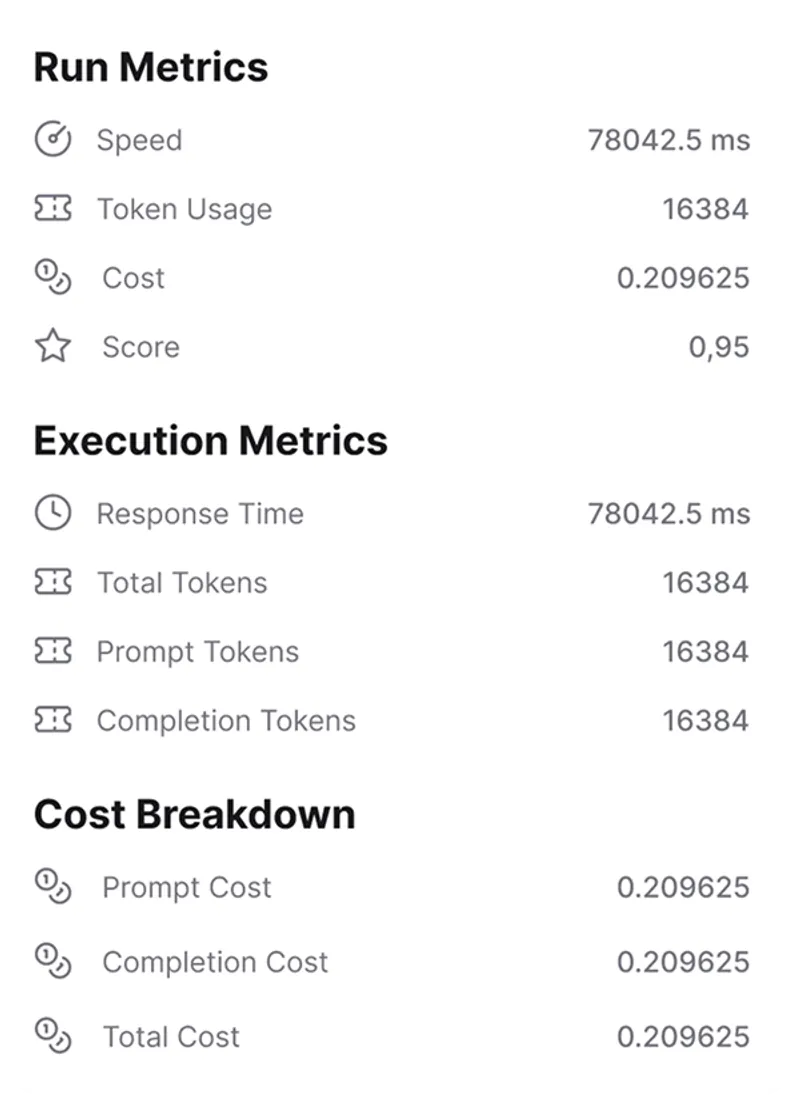

Prompt FinOps

Set limits, forecast costs, and optimize usage across vendors.

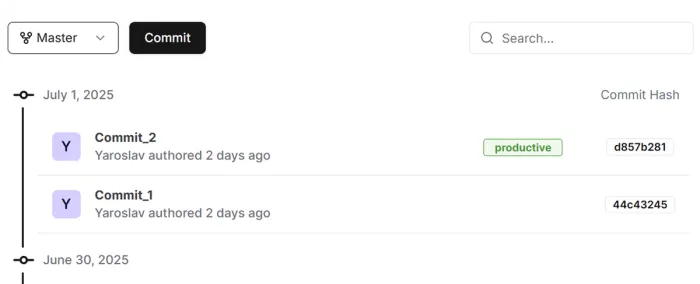

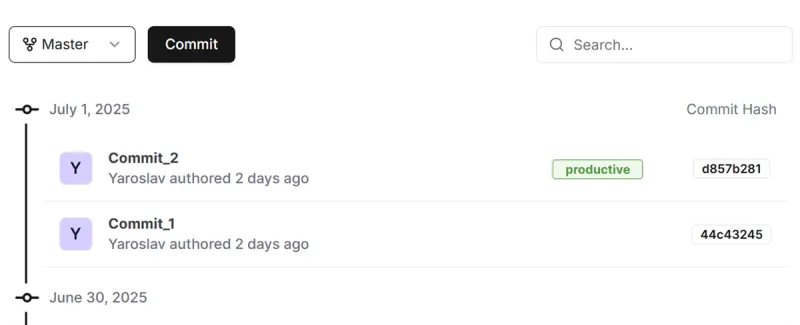

Prompt Versioning & CI/CD

Commit, branch, and deploy prompt changes like code.

Fixing Prompt Chaos with Genum

Scaling prompt engineering requires structure, consistency, and resilience. Genum provides the framework to fix the critical failures in GenAI automation.

Join the Community

Want more insights and real-world use cases of Genum Lab?

Develop prompts with confidence: test, validate, and monitor in one place.

From versioning to real-time debugging, Genum Lab gives your AI workflows the reliability they’ve been missing.

Join a growing community of engineers building better prompts.